Climate Sensitivity: The Skeptic Endgame

Posted on 2 March 2011 by dana1981

With all of the distractions from hockey sticks, stolen emails, accusations of fraud, etc., it's easy to lose sight of the critical, fundamental science. Sometimes we need to filter out the nonsense and boil the science down to the important factors.

One of the most popular "skeptic" arguments is "climate sensitivity is low." It's among the arguments most commonly used by prominent "skeptics" like Lindzen, Spencer, Monckton, and our Prudent Path Week buddies, the NIPCC:

"Corrected feedbacks in the climate system reduce climate sensitivity to values that are an order of magnitude smaller than what the IPCC employs."

There's a good reason this argument is so popular among "skeptics": if climate sensitivity is within the IPCC range, then anthropogenic global warming "skepticism" is all for naught.

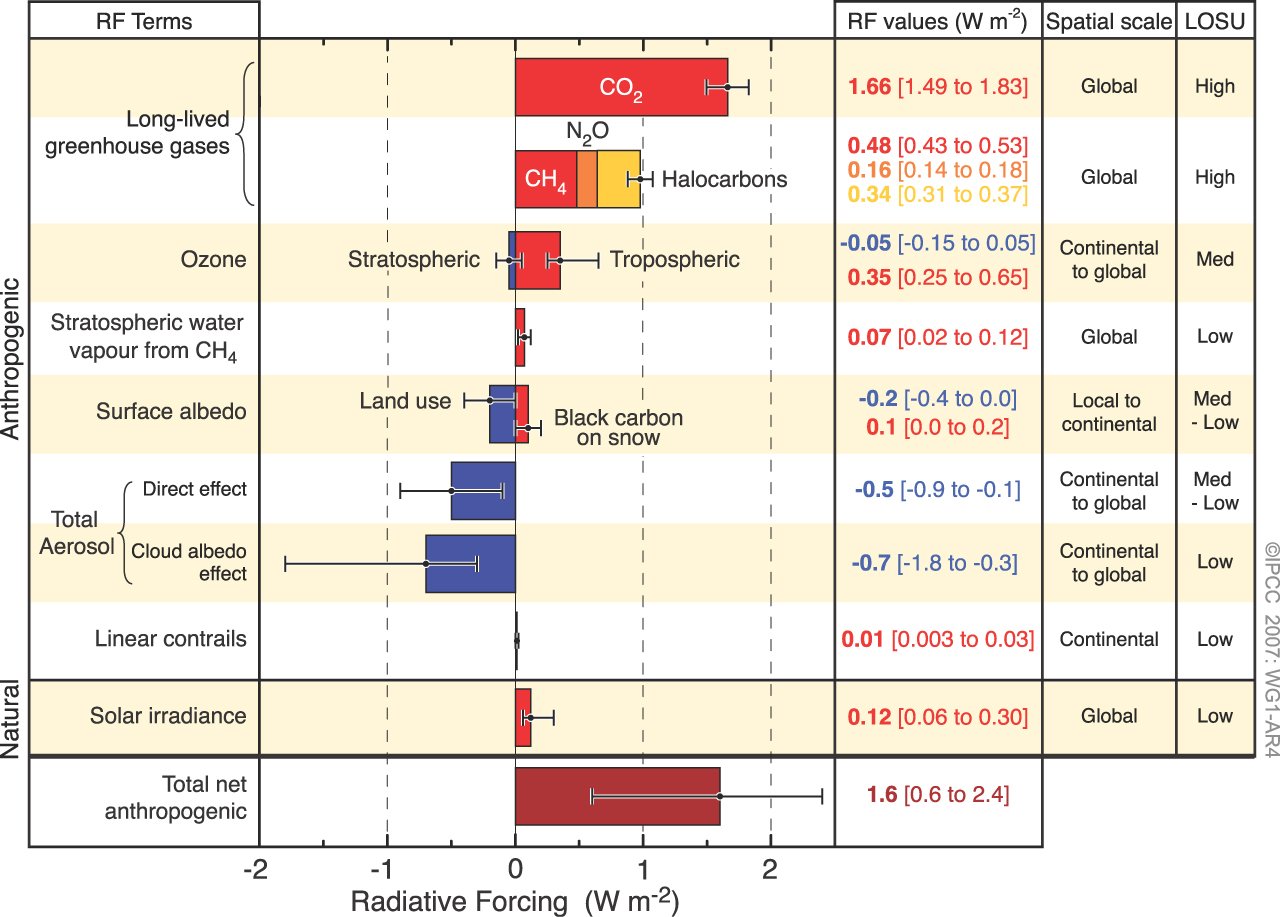

As we showed in the Advanced 'CO2 effect is weak' rebuttal, a surface temperature change is calculated by multiplying the radiative forcing by the climate sensitivity parameter. And the radiative forcing from CO2, which is determined from empirical spectroscopic measurements of downward longwave radiation and line-by-line radiative transfer models, is a well-measured quantity known to a high degree of accuracy (Figure 1).

So we know the current CO2 radiative forcing (now up to 1.77 W/m2 in 2011 as compared to 1750), and we know what the radiative forcing will be for a future CO2 increase, to a high degree of accuracy. This means that the only way CO2 can't have caused significant global warming over the past century, and that it will not continue to have a significant warming effect as atmospheric CO2 continues to increase, is if climate sensitivity is low.
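As a rough check on the 1.77 W/m2 figure, here is a minimal sketch. It assumes the widely used simplified logarithmic fit for CO2 forcing (5.35 × ln(C/C0) W/m2), a pre-industrial concentration of 280 ppm, and a 2011 concentration of about 390 ppm. None of these inputs are stated in this post, so treat them as assumptions rather than the exact calculation behind the number above.

```python
import math

# Assumption: simplified logarithmic fit for CO2 radiative forcing.
FORCING_COEFF_W_M2 = 5.35
# Assumption: pre-industrial (1750) CO2 concentration in ppm.
PREINDUSTRIAL_PPM = 280.0


def co2_forcing(ppm, baseline_ppm=PREINDUSTRIAL_PPM):
    """Radiative forcing (W/m2) of CO2 at `ppm` relative to `baseline_ppm`."""
    return FORCING_COEFF_W_M2 * math.log(ppm / baseline_ppm)


# Assumption: roughly 390 ppm of CO2 in 2011.
forcing_2011 = co2_forcing(390.0)
print(f"CO2 forcing since 1750: {forcing_2011:.2f} W/m2")
```

Under these assumptions the result rounds to the 1.77 W/m2 quoted above.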

Global Warming Thus Far

If the IPCC climate sensitivity range is correct and we were to stabilize atmospheric CO2 concentrations at today's levels, then once the planet reached equilibrium, the radiative forcing would have caused between 0.96 and 2.2°C of surface warming, with a most likely value of 1.4°C. Given that the Earth's average surface temperature has only warmed 0.8°C over the past century, it becomes hard to argue that CO2 hasn't been the main driver of global warming if the IPCC climate sensitivity range is correct.
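The equilibrium warming figures can be reproduced with a short sketch. It takes the IPCC sensitivity range of 2 to 4.5°C per doubling of CO2 (most likely 3°C) and assumes a forcing of about 3.7 W/m2 per doubling, a value not stated in this post; the function name is illustrative only.

```python
# Assumption: radiative forcing from a doubling of CO2, in W/m2.
FORCING_PER_DOUBLING = 3.7
# CO2 forcing in 2011 relative to 1750, as quoted in the post (W/m2).
CO2_FORCING_2011 = 1.77


def equilibrium_warming(forcing, sensitivity_per_doubling):
    """Equilibrium surface warming (°C) for a forcing in W/m2.

    The sensitivity per doubling is converted to a sensitivity parameter
    (°C per W/m2) and multiplied by the forcing.
    """
    sensitivity_parameter = sensitivity_per_doubling / FORCING_PER_DOUBLING
    return forcing * sensitivity_parameter


# IPCC range: 2 to 4.5°C per doubling, most likely value 3°C.
warming_low = equilibrium_warming(CO2_FORCING_2011, 2.0)
warming_best = equilibrium_warming(CO2_FORCING_2011, 3.0)
warming_high = equilibrium_warming(CO2_FORCING_2011, 4.5)

for label, value in [("low", warming_low), ("best", warming_best), ("high", warming_high)]:
    print(f"{label:>4}: {value:.2f} °C")
```

With these inputs the three values come out near 0.96, 1.4 and 2.2°C, matching the range above.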

For the equilibrium warming from the current CO2 radiative forcing to be as small as 0.5°C, the climate sensitivity could only be half as much as the lower bound of the IPCC range (approximately 1°C for a doubling of atmospheric CO2). In short, there are only two ways to argue that human CO2 emissions aren't driving the current global warming. In addition to obviously needing some 'natural' effect or combination of natural effects exceeding the CO2 radiative forcing, "skeptics" also require that either:

- Climate sensitivity is much lower than the IPCC range.

- A very large cooling effect is offsetting the CO2 warming.

The only plausible way the second scenario could be true is if aerosols were offsetting the CO2 warming, and in addition some unidentified 'natural' forcing is having a greater warming effect than CO2. The NIPCC has argued for this scenario, but it contradicts the arguments of Richard Lindzen, who operates under the assumption that the aerosol forcing is actually small.

In the first scenario, "skeptics" can argue that CO2 does not have a significant effect on global temperatures. The second scenario requires admitting that CO2 has a significant warming effect, but finding a cooling effect which could plausibly offset that warming effect. And to argue for continuing with business-as-usual, the natural cooling effect would have to continue offsetting the increasing CO2 warming in the future. Most "skeptics" like Lindzen and Spencer rightly believe the first scenario is more plausible.

Future Global Warming

We can apply similar calculations to estimate global warming over the next century based on projected CO2 emissions. According to the IPCC, if we continue on a business-as-usual path, the atmospheric CO2 concentration will be around 850 ppm in 2100.

If we could stabilize atmospheric CO2 at 850 ppm, the radiative forcing from pre-industrial levels would be nearly 6 W/m2. Using the IPCC climate sensitivity range, this corresponds to an equilibrium surface warming of 3.2 to 7.3°C (most likely value of 4.8°C). In order to keep the business-as-usual warming below the 2°C 'danger limit', again, climate sensitivity would have to be significantly lower than the IPCC range (approximately 1.2°C for a doubling of atmospheric CO2).
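The same arithmetic can be sketched for the 850 ppm scenario, including the inverse question of how low sensitivity would have to be to stay under 2°C. The logarithmic forcing fit (5.35 × ln(C/C0)), the 280 ppm baseline, and the 3.7 W/m2 per doubling are assumptions not stated in this post.

```python
import math

# Assumptions: simplified CO2 forcing fit, 280 ppm baseline,
# and 3.7 W/m2 of forcing per doubling of CO2.
FORCING_COEFF_W_M2 = 5.35
PREINDUSTRIAL_PPM = 280.0
FORCING_PER_DOUBLING = 3.7


def co2_forcing(ppm):
    """Radiative forcing (W/m2) relative to the pre-industrial baseline."""
    return FORCING_COEFF_W_M2 * math.log(ppm / PREINDUSTRIAL_PPM)


def equilibrium_warming(forcing, sensitivity_per_doubling):
    """Equilibrium warming (°C) for a given sensitivity per CO2 doubling."""
    return forcing * sensitivity_per_doubling / FORCING_PER_DOUBLING


def sensitivity_for_limit(forcing, warming_limit):
    """Sensitivity per doubling (°C) that yields exactly `warming_limit`."""
    return warming_limit * FORCING_PER_DOUBLING / forcing


forcing_850 = co2_forcing(850.0)
warming_range = [equilibrium_warming(forcing_850, s) for s in (2.0, 3.0, 4.5)]
required_sensitivity = sensitivity_for_limit(forcing_850, 2.0)

print(f"forcing at 850 ppm: {forcing_850:.2f} W/m2")
print("warming (low, best, high):", [round(w, 1) for w in warming_range])
print(f"sensitivity needed for 2 °C: {required_sensitivity:.2f} °C per doubling")
```

With the unrounded forcing (just under 6 W/m2) the upper end comes out slightly below the 7.3°C quoted above, which corresponds to rounding the forcing up to 6 W/m2. The required sensitivity lands close to the 1.2°C figure.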

Thus it becomes very difficult to justify continuing in the business-as-usual scenario that "skeptics" tend to push for, unless climate sensitivity is low. So what are the odds they're right?

Many Lines of Evidence

The IPCC estimated climate sensitivity from many different lines of evidence, including a variety of instrumental observations (AR4, WG1, Section 9.6.2).

"Most studies find a lower 5% limit of between 1°C and 2.2°C, and studies that use information in a relatively complete manner generally find a most likely value between 2°C and 3°C…. Results from studies of observed climate change and the consistency of estimates from different time periods indicate that ECS [Equilibrium Climate Sensitivity] is very likely larger than 1.5°C with a most likely value between 2°C and 3°C…constraints from observed climate change support the overall assessment that the ECS is likely to lie between 2°C and 4.5°C with a most likely value of approximately 3°C."

The IPCC also used general circulation models (GCMs) to estimate climate sensitivity (AR4, WG1, Chapter 10):

“Equilibrium climate sensitivity [from GCMs] is found to be most likely around 3.2°C, and very unlikely to be below about 2°C…A normal fit yields a 5 to 95% range of about 2.1°C to 4.4°C with a mean value of equilibrium climate sensitivity of about 3.3°C (2.2°C to 4.6°C for a lognormal distribution, median 3.2°C)”

“the global mean equilibrium warming for doubling CO2...is likely to lie in the range 2°C to 4.5°C, with a most likely value of about 3°C. Equilibrium climate sensitivity is very likely larger than 1.5°C.”

IPCC AR4 WG1 Chapter 10 also contains a nice figure summarizing these many different lines of evidence (Figure 2). The bars on the right side represent the 5 to 95% uncertainty ranges for each climate sensitivity estimate. Note that every single study puts the climate sensitivity lower bound at no lower than 1°C in the 95% confidence range, and most put it no lower than 1.2°C.

Figure 2: IPCC climate sensitivity estimates from observational evidence and climate models

Knutti and Hegerl (2008) arrive at the same conclusion as the IPCC, similarly examining many lines of observational evidence plus climate model results (Figure 3).

Game Over?

To sum up, the "skeptics" need climate sensitivity to be less than 1.2°C for a doubling of CO2 to make a decent case that CO2 isn't driving global warming and/or that we can safely continue with business-as-usual. However, almost every climate sensitivity study using either observational data or climate models puts the probability that climate sensitivity is below 1.2°C at 5% or less.

The bottom line is that the 1.77 W/m2 CO2 forcing has to go somewhere. The energy can't just disappear, so the only realistic way it could have a small effect on the global temperature is if climate sensitivity to that forcing is low.

Thus it's clear why the "skeptics" focus so heavily on "climate sensitivity is low"; if it's not, they really don't have a case. Yet unless the estimates from climate models and from the many lines of empirical evidence are all biased high, there is less than a 5% chance the "skeptics" are right on this critical issue.

I don't like those odds.

Figure: Greenland temperatures during the last 100 kyears

If we assume a scenario under which atmospheric CO2 concentration was constant for a long time (presumably at pre-industrial level, let's say at 280 ppmv) then started to increase exponentially at the rate observed, we get an excitation that is 0 for dates before 1934 and is α(t-1934) for t > 1934. The artificial sharp transition introduced in 1934 this way does not have much effect on temperature response at later dates.

If global climate responds to an excitation as a first order linear system with relaxation time τ, rate of global average temperature change is $\alpha\lambda\left(1 - e^{-(t-1934)/\tau}\right)$ for a date t after 1934. As the expression in parentheses is smaller than 1, this rate can't possibly exceed αλ = 0.018°C/year. It means global average temperature can't increase at a rate more than 1.8°C/century even in 2100, which means less than 1.6°C increase relative to current global average temperature, no matter how small τ is supposed to be.

However, a short relaxation time is unlikely, because it takes a long time to heat up the oceans due to their huge thermal inertia.

For example, if τ = 500 years, the current rate of change due to CO2 forcing is 0.26°C/century, while in 2100 it is 0.51°C/century (according to Hansen & Sato, of course). However, ocean turnover time being several millennia, we have probably overestimated the actual rates. It means that most of the warming observed during the last few decades is due to internal noise of the climate system, not CO2.
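The two rates quoted for τ = 500 follow directly from the first order response expression above. Here is a minimal sketch of that arithmetic; it takes αλ = 0.018°C/year and the 1934 onset from the text, and assumes "current" means 2011 and that τ is in years. It only reproduces the numbers and does not test whether the first order model itself is appropriate.

```python
import math

# From the text: asymptotic rate alpha * lambda, in °C per year.
ALPHA_LAMBDA = 0.018
# From the text: year in which the idealized linear excitation starts.
ONSET_YEAR = 1934


def warming_rate(year, tau_years):
    """Rate of temperature change (°C/year) for a first order linear
    system driven by a ramp that starts in ONSET_YEAR."""
    elapsed = year - ONSET_YEAR
    return ALPHA_LAMBDA * (1.0 - math.exp(-elapsed / tau_years))


# Assumption: "current" is 2011, and tau = 500 is in years.
rate_now = warming_rate(2011, 500.0)
rate_2100 = warming_rate(2100, 500.0)

print(f"2011: {rate_now * 100:.2f} °C/century")
print(f"2100: {rate_2100 * 100:.2f} °C/century")
```

Both values stay below the 1.8°C/century ceiling, since the factor in parentheses is always less than 1.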

Anyway, the exponential increase of CO2 itself can't go on forever simply because technology is changing all the time on its own, even with no government intervention whatsoever. Therefore it should follow a logistic curve. If the epoch of CO2 increase is substantially shorter than the relaxation time of the climate system, the peak rate of change due to CO2 becomes negligible.

Comments