The Debunking Handbook 2020: Prevent misinformation from sticking if you can

Posted on 20 October 2020 by John Cook, BaerbelW

This blog post is part 2 of a series of excerpts from The Debunking Handbook 2020 which can be downloaded here. The list of references is available here.

Prevent misinformation from sticking if you can

Because misinformation is sticky, it’s best preempted. This can be achieved by explaining misleading or manipulative argumentation strategies to people—a technique known as “inoculation” that makes people resilient to subsequent manipulation attempts. A potential drawback of inoculation is that it requires advance knowledge of misinformation techniques and is best administered before people are exposed to the misinformation.

As misinformation is hard to dislodge, preventing it from taking root in the first place is one fruitful strategy. Several prevention strategies are known to be effective.

Simply warning people that they might be misinformed can reduce later reliance on misinformation 27, 78. Even general warnings (“the media sometimes does not check facts before publishing information that turns out to be inaccurate”) can make people more receptive to later corrections. Specific warnings that content may be false have been shown to reduce the likelihood that people will share the information online 28.

The process of inoculation or “prebunking” includes a forewarning as well as a preemptive refutation and follows the biomedical analogy 29. By exposing people to a severely weakened dose of the techniques used in misinformation (and by preemptively refuting them), “cognitive antibodies” can be cultivated. For example, by explaining to people how the tobacco industry rolled out “fake experts” in the 1960s to create a chimerical scientific “debate” about the harms from smoking, people become more resistant to subsequent persuasion attempts using the same misleading argumentation in the context of climate change 30.

The effectiveness of inoculation has been shown repeatedly and across many different topics 30, 31, 32, 33, 34. Recently, it has been shown that inoculation can be scaled up through engaging multimedia applications, such as cartoons 35 and games 36, 37.

Simple steps to greater media literacy

Simply encouraging people to critically evaluate information as they read it can reduce the likelihood of taking in inaccurate information 38 or help people become more discerning in their sharing behavior 39.

Educating readers about specific strategies to aid in this critical evaluation can help people develop important habits. Such strategies include: taking a “buyer beware” stance towards all information on social media; slowing down and thinking about the information provided, evaluating its plausibility in light of alternatives 40, 41; always considering information sources, including their track record, their expertise, and their motives 42; and verifying claims (e.g., through “lateral reading” 43) before sharing them 44. Lateral reading means checking other sources to evaluate the credibility of a website rather than trying to analyse the site itself. Many tools and suggestions for enhancing digital literacy exist 45.

You cannot assume that people spontaneously engage in such behaviours 39. People do not routinely track, evaluate, or use the credibility of sources in their judgments 10. However, when they do, the impact of misinformation from less-credible sources can be reduced.

The strategic landscape of debunking

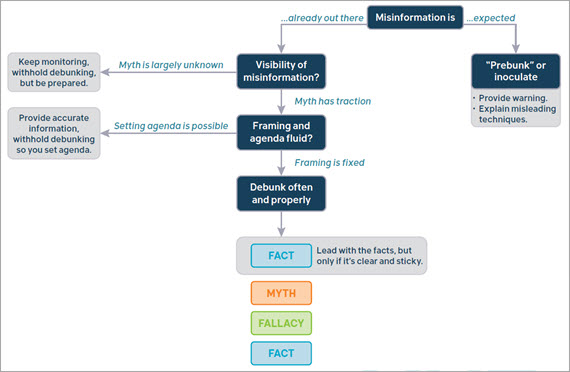

If you are unable to prevent misinformation from sticking, then you have another arrow in your quiver: Debunking! However, you should first think about a few things before you start debunking.

Everyone has limited time and resources, so you need to pick your battles. If a myth is not spreading widely, or does not have the potential to cause harm now or in the future, there may be no point in debunking it. Your efforts may be better invested elsewhere, and the less said about an unknown myth the better.

Corrections have to point to the misinformation, so they necessarily raise its familiarity. However, hearing about misinformation in a correction does little damage, even if the correction introduces a myth that people have never heard of before 46. Nonetheless, one should be mindful not to give undue exposure to fringe opinions and conspiracy claims through a correction. If no one has heard of the myth that earwax can dissolve concrete, why correct it in public?

Debunkers should also be mindful that any correction necessarily reinforces a rhetorical frame (i.e., a set of “talking points”) created by someone else. You cannot correct someone else’s myth without talking about it. In that sense, any correction—even if successful—can have unintended consequences, and choosing one’s own frame may be more beneficial. For example, highlighting the enormous success and safety of a vaccine might create a more positive set of talking points than debunking a vaccine-related myth 47. And they are your talking points, not someone else’s.

Who should debunk?

Successful communication rests on the communicator’s credibility.

Information from sources that are perceived to be credible typically creates stronger beliefs 48 and is more persuasive 49, 50. By and large, this also holds for misinformation 51, 52, 53. However, credibility may have limited effects when people pay little attention to the source 54, 55, or when the sources are news outlets rather than people 56, 57.

Source credibility also matters for corrections of misinformation, although perhaps to a lesser extent 51, 53. When breaking down credibility into trustworthiness and expertise, perceived trustworthiness of a debunking source may matter more than its perceived expertise 58, 59. Sources with high credibility on both dimensions (e.g., health professionals or trusted health organizations) may be ideal choices 60, 61, 62.

It is worth keeping in mind that the credibility of a source will matter more to some groups than others, depending on content and context 60, 63. For example, people with negative attitudes toward vaccines distrust formal sources of vaccine-related information (including generally-trusted health organizations) 64.

Tailor the message to the audience and use a messenger trusted by the target group 65. Discredit disinformation sources that have vested interests 53.

To learn more, continue with The elusive backfire effects
