No, a cherry-picked analysis doesn’t demonstrate that we’re not in a climate crisis

Posted on 7 October 2022 by Ken Rice

A group of Italian scientists recently published a paper in which they critically assessed extreme [weather] event trends. This has received quite a lot of attention amongst some “skeptics” since it concludes that

…on the basis of observational data, the climate crisis that, according to many sources, we are experiencing today, is not evident yet.

Before addressing what was presented in this paper, it’s worth making some general comments. Not only is there overwhelming agreement that humans are causing global warming (Cook et al. 2013; Cook et al. 2016), the latest IPCC report went so far as to say that it is now unequivocal that human influence has warmed the atmosphere, ocean and land since pre-industrial times (Eyring et al. 2021). However, whether or not this implies a climate crisis, or a climate emergency, is a judgement that cannot be decided by a scientific analysis alone.

What’s also become clear is that stopping global warming, and the associated changes to the climate, will require getting human emissions of greenhouse gases, mainly carbon dioxide, to (net) zero (MacDougall et al. 2020). Hence, a judgement of whether or not we’re in a climate crisis should not really depend only on an assessment of the human influence to date, but also on an assessment of what could happen in the future and what might be required so as to limit how much more is emitted before reaching (net) zero.

The paper also focussed primarily on what is referred to as Detection and Attribution. This is a two-step process in which a change in some climatic variable is first detected, and then an analysis is carried out to assess if that change can be attributed to an anthropogenic influence. However, as this recent Realclimate article highlights, attribution doesn’t necessarily require first detecting some change. It is possible to determine an anthropogenic influence for an individual extreme event, and there are now many examples of extreme events that have been linked to human-caused climate change.

Additionally, the paper only considered 5 types of extreme events, ignoring that there are many other potential impacts of climate change: for example, sea level rise, changing weather patterns, wildfires, ocean acidification, impacts on ecosystems, and even the possibility of compound events. As Friederike Otto points out in this article, they don’t even consider heatwaves, “where the observed trends are so incredibly obvious.” In fact, in the same article, the experts who were quoted regarded the cherry-picking and manipulation as so egregious that some called for the paper to be retracted.

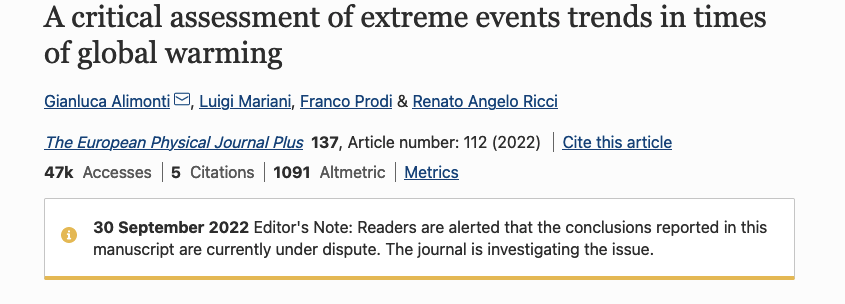

These criticisms have now been acknowledged by the Journal, which has added an Editor's note to the paper saying "Readers are alerted that the conclusions reported in this manuscript are currently under dispute. The journal is investigating the issue."

The authors of the paper also submitted their paper four weeks after the release of the Intergovernmental Panel on Climate Change’s (IPCC) AR6 WGI report. The paper does mention this report, but then largely ignores what it presents. For example, they failed to note that it is stated in the Summary for Policymakers that “Human-induced climate change is already affecting many weather and climate extremes in every region across the globe. Evidence of observed changes in extremes such as heatwaves, heavy precipitation, droughts, and tropical cyclones, and, in particular, their attribution to human influence, has strengthened since AR5.”

The first type of extreme event considered in the paper is hurricanes, often referred to as tropical cyclones (TCs). There is still a lot of debate about whether or not there has been a detectable change in TC activity that can be attributed to a human influence (Knutson et al. 2019). Some work does indeed detect an increase in TC intensity over the last few decades (Kossin et al. 2020), which is consistent with what would be expected in a warming world (Emanuel 2020). Other work, however, suggests that - in some cases - the trends are a consequence of observational biases, rather than being real (Vecchi et al. 2021). However, even the latter paper concludes that “climate variability and aerosol-induced mid-to-late-20th century major hurricane frequency reductions have probably masked century-scale greenhouse-gas warming contributions to North Atlantic major hurricane frequency.”

One complication with attributing a human influence to TCs is that human-induced warming is expected to reduce the total number of TCs, but cause an increase in the frequency and intensity of the strongest ones. Consequently, metrics that consider all TCs can show little in the way of trends, even if there has been an anthropogenic influence (Kang & Elsner 2016). If one considers the strongest TCs, then there are indeed indications that the strongest ones are getting more frequent.

Additionally, there are other ways in which TCs can be influenced by human-driven warming. Global warming could lead to an increase in the frequency of rapid intensification events (Emanuel 2017) and a poleward migration of the latitude of maximum TC intensity (Kossin et al. 2016). Sea level rise, which can definitively be attributed to human-driven warming, will also lead to enhanced storm surge. Similarly, a warmer atmosphere can hold more water vapour, intensifying the precipitation associated with a TC and increasing the risk of severe flooding.

So, even if there is still debate about whether or not there is an attributable trend in TC activity, there are many other ways to assess an anthropogenic influence, and there is now plenty of evidence that TCs have already been influenced by human-caused warming, and little doubt that this will continue as humans continue to emit greenhouse gases into the atmosphere.

The paper also looked at droughts and floods, tornadoes, extreme precipitation events, and global greening and agricultural production. Droughts are complex phenomena that are not easy to study, but there is clear evidence that climate change is making drought worse, and there is evidence that anthropogenic influences are impacting global drought frequency, duration, and intensity (Chiang et al. 2021). Similarly, both observations and models indicate that total precipitation from intense precipitation events doubles for each degree of global warming (Myhre et al. 2019). The latest IPCC WG1 report says “The frequency and intensity of heavy precipitation events have increased since the 1950s over most land area for which observational data are sufficient for trend analysis (high confidence), and human-induced climate change is likely the main driver”.

On the other hand, our current understanding of how global warming might influence tornadoes is so uncertain that we don’t really know if there is a link between human-induced climate change and tornadoes. It’s certainly the case that CO2 from human emissions has led to global greening and has influenced agricultural production. However, there are already signs that nutrient constraints and water stress are counteracting this effect. For example, drought and tree mortality in the tropics are offsetting enhanced plant productivity in the Arctic.

Clearly, analysing how human-driven global warming is influencing extreme events is very challenging and there is still ongoing debate about the link between climate change and these extreme events. However, there is clear evidence that human-induced climate change is already impacting many extreme events. The link will almost certainly become clearer with time and the expectation is that climate change will make many extreme events more intense and more frequent.

This understanding isn't challenged by a simplistic, and selective, analysis that ignores many other lines of evidence that demonstrate a link between extreme events and human-caused warming. Also, as mentioned above, we cannot determine if we’re in a climate crisis, or not, using a scientific analysis alone. It requires a judgement about the impacts to date, the potential future impacts, and what we might need to do to limit the overall impact. If the authors feel that the label 'climate crisis' is not appropriate, then they are making a subjective choice that - today - is at odds with the views of many other experts, organisations, and even some governments.

Notes:

This post was drafted by Ken Rice, with help from @TheDisproof, who has been very active on Twitter debunking various climate myths.

I am sure that a rigorous, accurate, detailed evaluation of water use in the Colorado Basin could also conclude that there is no evidence of a crisis or emergency.

Only a few users of water have been getting less than they want ... so far.

...just as a rigorous, thorough medical examination of a person falling off a high-rise building could say "no signs of any harm yet" - as long as they finished before the person reached the ground.

If the authors of this paper find no statistical evidence of climate change on weather events, it seems incumbent on them to posit a reason.

From the conclusions section: "It would be nevertheless extremely important to define mitigation and adaptation strategies that take into account current trends." This seems reasonable except for two things:

1) the authors are saying the current trends are indistinguishable from zero.

2) Even if nonzero, nobody expects 'current trends' to remain current for long, in an exponential phenomenon.

You can't look at what is happening and conclude anything else: that we're in the midst of something best explained by the exponential function. Which is also used to describe things that are exploding.

After sea level rises 3 feet, it's easy to say we should have done something. But the actual moment to do something is when you jump off the cliff, not when you hit bottom (btw, your trajectory after jumping off a cliff is also best described by the exponential function).

To pick a nit, I think your trajectory after jumping off a cliff is best described by an ellipse, with the centre of the earth as one of the foci. You are launching yourself into orbit - albeit a short one once the earth gets in the way.

Next best approximation is a parabola, and that probably fits an exponential increase in vertical speed to a pretty high accuracy.

The one thing it definitely is not, is linear. That very rapidly becomes obvious.

But then, the contrarian industry has long had a habit of trying to force reality to fit their beliefs - e.g. the infamous North Carolina effort to declare that sea level was only allowed to change based on a linear extrapolation of past readings.

Sea level rise appears to be following a quadratic (parabolic) curve. Perhaps this is not surprising because steadily increasing and accumulating CO2 levels in the atmosphere, and known positive feedbacks causing the warming trend, would be consistent with a parabolic function, and not so much a linear or exponential function. But if Antarctic ice sheets physically destabilise that could be a local exponential function.

Clausius-Clapeyron means that with global warming the atmosphere can hold more water vapor. Whether it does hold more water vapor depends on a surprisingly complex set of factors. Drought intensification is a simpler case. C-C means there will almost always be more evaporation and will often (not always) be more transpiration.

Short term rainfall is a much more complex case. I have found no trend in US 1 hour to 6 hour rainfalls in 500+ stations over 70 years. However as rainfalls get progressively longer (6 instead of 1) there are consistently more increasing trends and fewer declining trends. That's also why we almost never see a new 1 or 5 or 10 minute rainfall record. C-C has essentially zero influence at the very short term. But C-C definitely has more influence at the longer durations which is why we see new 24 hour (and longer) all-time records being set like with Harvey. But Harvey brings up an important point, the water vapor has to come from somewhere and it came from a warmer Gulf of Mexico. That much is quite obvious.

There are changes in regional tornado climatology. I have yet to do that analysis and it will be a bit difficult with relatively rare events. But overall in the US EF-3 and EF-4 tornadoes are declining, EF-5 are statistically flat. There are flat statistics for winter and generally declining statistics for warmer months, but fewer declines 1990-present than 1950-present. In short, complicated and dependent on time periods affected by natural cycles. Easy to cherry pick.

My Atlantic hurricane analysis found a notable uptick in >= 120 knot storms. I set that threshold to avoid the small numbers problems using categories. RI is interesting, someone needs to write a paper with a new definition because the old definition (>= 30 knots increase in 24 hours) is true for 60% of Atlantic hurricanes. The increase in RI from warmer oceans shows up most strongly at >= 40 knots in 24 hours. Ian met that threshold. But there's also an increase in rapid weakening over water. Over land weakening is expected and I exclude all such cases. Globally Ryan Maue's data shows fewer hurricanes but the average hurricane is stronger. Also have to be careful not to cherry pick intervals.
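To make the two thresholds concrete, here is a minimal Python sketch that flags rapid intensification in a series of maximum wind speeds. The function name, the 6-hourly spacing, and the sample winds are all made up for illustration; this is not the analysis code referred to above, and real best-track data would also need handling for gaps and for time spent over land.

```python
def rapid_intensification_flags(winds_kt, threshold_kt=30, step_hours=6, window_hours=24):
    """Return the indices where wind rises by >= threshold_kt over the next window_hours.

    winds_kt is assumed to be evenly spaced at step_hours with no gaps.
    """
    steps = window_hours // step_hours
    flagged = []
    for i in range(len(winds_kt) - steps):
        if winds_kt[i + steps] - winds_kt[i] >= threshold_kt:
            flagged.append(i)
    return flagged


# Hypothetical 6-hourly maximum sustained winds (knots) for one storm.
track = [35, 40, 45, 55, 70, 85, 100, 105, 100]

ri_30 = rapid_intensification_flags(track, threshold_kt=30)
ri_40 = rapid_intensification_flags(track, threshold_kt=40)
print("start indices meeting >= 30 kt / 24 h:", ri_30)
print("start indices meeting >= 40 kt / 24 h:", ri_40)
```

In this toy track the 30 knot definition is met from every starting point, while the 40 knot definition picks out only the steepest part of the intensification, which is the kind of separation a stricter threshold is meant to give.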

Overall I agree with the article above that there are many other impacts that need to be considered. That's particularly true if we are going to decide how to rationally spend money on impact mitigation.

Eric:

What source of rainfall data did you use in your U.S. analysis? How did you determine the frequencies?

Analysis of rainfall data suffers from three major complications:

The solution to these problems involves looking at many stations over a region, and fitting statistical distributions to the records. The statistical distributions are then used to derive estimates of 5-minute, 1-hour, 6-hour, etc. duration extreme events.

There is also rainfall data available from radar systems. An example of a system that incorporates station precipitation gauge values with radar and model estimates can be seen here:

https://centreau.org/en/events-news/events/introduction-to-the-canadian-precipitation-analysis-capa/

Eric,

I suspect that a simple 'tornado count' of each intensity level is not the best measure of tornado activity. A better measure would be the sum of the length of tornado impact, either in time or physical distance travelled, for each intensity level.

That probably also applies to cyclones. The total duration or distance of each level of intensity would be more meaningful than a simple count. And, of course, the measure has to be of all cyclones, not just the Atlantic ones called hurricanes, and definitely not just the cyclones that make landfall on USA territory. And Tropical Storm level cyclones also need to be part of the evaluation, especially the magnitude of rain fall from them.

Bob, there were 577 stations with reasonable coverage since 1950. There were more stations with sparse coverage which I ignored. I also ignored stations with < 70 years of coverage. They all start with USC and USW, then a station number. The data is described and available here Cooperative Observer Network (COOP). While it doesn't mean there is scientific value to the data, I certainly appreciate the efforts of thousands of observers manually entering data every hour or more often in some cases, and others who transcribed it.

As we've discussed before you believe the way to analyze global warming influences is to look at changes in the distribution over time. I prefer to leave out most of the data for rainfall since I am only interested in one thing: the maximum amount of rain in the interval annually (and annually by month). Why I want that trend is simple, that amount is what creates the largest runoff. I fully agree that distributions will show changes skewing in various ways to higher amounts of rainfall in some subset of events determined to be extreme.
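A rough Python sketch of the annual-maximum approach described above, under stated assumptions: the input is a gap-free hourly series of integer amounts (hundredths of an inch), a window that straddles New Year is credited to the year of its last hour, and the trend is an ordinary least-squares slope. The function names and the sample numbers are hypothetical, and real station files would need gap and quality-flag handling first.

```python
from collections import defaultdict


def annual_max_rolling(records, window_hours):
    """Largest rolling window_hours total per year.

    records: list of (year, amount) tuples in time order, one per hour, no gaps.
    A window is assigned to the year of its final hour.
    """
    amounts = [amount for _, amount in records]
    best = defaultdict(int)
    running = 0
    for i, (year, amount) in enumerate(records):
        running += amount
        if i >= window_hours:
            running -= amounts[i - window_hours]  # drop the hour that left the window
        if i >= window_hours - 1:                 # only score complete windows
            best[year] = max(best[year], running)
    return dict(best)


def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


# Tiny made-up series: four "hours" per year, amounts in hundredths of an inch.
records = [
    (2000, 0), (2000, 10), (2000, 30), (2000, 5),
    (2001, 0), (2001, 20), (2001, 40), (2001, 0),
    (2002, 50), (2002, 30), (2002, 0), (2002, 0),
]

maxima_2h = annual_max_rolling(records, window_hours=2)
years = sorted(maxima_2h)
slope = ols_slope(years, [maxima_2h[y] for y in years])
print(maxima_2h, slope)
```

Running the same function with different `window_hours` values (1, 6, 24) on a real station would give one trend per duration, which is the comparison being discussed.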

In many cases they will use the top 0.1% of events. But with roughly 100 rainfall events per year, that's just one event per 10 years. However they can look at numerous stations over a region (as few as 10) to get the same number of data points as I use.

One Planet, I agree. Counts are only a subset of available data. The data includes TOR_F_SCALE, TOR_LENGTH, TOR_WIDTH, property damage estimates, and a variety of text. Not all events will have all the fields and the text varies greatly. But a careful analysis would use as much as possible. I would also look more thoroughly for seasonal changes because there are some (November increases in particular) even if annual counts are down. The tornado data also comes from the NCEI (formerly NCDC). Storm Events Database
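As a sketch of the "total path length per intensity level" measure suggested earlier in the thread, the following Python groups events by `TOR_F_SCALE` and sums `TOR_LENGTH`. The two field names come from the comment above; the sample rows, the function name, and the assumption that length is in miles are illustrative only. Events with a rating but no recorded length are counted but contribute nothing to the total, which is one possible choice among several.

```python
from collections import defaultdict


def path_length_by_scale(events):
    """Return {scale: (event_count, total_length)} for events that have a rating."""
    counts = defaultdict(int)
    totals = defaultdict(float)
    for event in events:
        scale = event.get("TOR_F_SCALE")
        if not scale:
            continue  # unrated events are skipped entirely
        counts[scale] += 1
        length = event.get("TOR_LENGTH")
        if length is not None:
            totals[scale] += length
    return {scale: (counts[scale], totals[scale]) for scale in counts}


# Hypothetical rows, not taken from the Storm Events Database.
events = [
    {"TOR_F_SCALE": "EF3", "TOR_LENGTH": 12.5},
    {"TOR_F_SCALE": "EF3", "TOR_LENGTH": 4.0},
    {"TOR_F_SCALE": "EF4", "TOR_LENGTH": 30.0},
    {"TOR_F_SCALE": "EF4", "TOR_LENGTH": None},
    {"TOR_F_SCALE": "", "TOR_LENGTH": 2.0},
]

summary = path_length_by_scale(events)
print(summary)
```

Comparing the count and the summed length side by side shows quickly whether a decline in counts is matched by a decline in total track length.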

Hurricane data is IBTRACS from here International Best Track Archive for Climate Stewardship (IBTrACS)

Eric:

From the first paragraph of the link to the COOP web page you provide (emphasis added):

Next question:

How did your analysis determine 1-hour and 6-hour totals from that data?

Hint: the COOP network involves manual reading of data. Temperature from a max/min thermometer (once per day), and precipitation total from a rain gauge that sits and collects rainfall for 24 hours, and is emptied manually and the quantity measured (once per day).

Side note: this is the network that requires the time of day adjustment for temperature trends.

Bob, here's an example of one of the files I used www.ncei.noaa.gov/data/coop-hourly-precipitation/v2/access/USC00010957.csv. for Boaz, AL. It is a daily report but it contains hourly amounts, IIRC hundredths of an inch as an integer. I got to the list of stations using a search: www.ncei.noaa.gov/access/search/data-search/coop-hourly-precipitation?dataTypes=HR00Val. Sorry my link above was not the hourly precipitation search result that I intended to show.

Yes, I am careful using temperature readings from sources like that where they typically had late afternoon readings which easily double counted high temperatures in the decades before the 1960's or 1970's (cutover to electronic or different ToD varies by station).

Eric. Thank you for the updated links.

In your second link, which allows searching for stations, the top title is a link to this web page that gives an indication of the instrumentation that is used to collect this data. On that page we see (emphasis added):

The Fischer-Porter is a weighing-type automated precipitation gauge. You can read a little bit about it here. Old data will have been on the paper coded tapes described in that link, but a lot of more recent data (last 30 years) will have been "modified for remote transmission" (interpretation: modified for electronic readouts).

Weighing gauges in general are poor at determining small amounts of precipitation over short intervals. The noise characteristics are not good. The gauge just tells you "this is how much weight I have now", and you need to process that into a change in weight over time to determine precipitation amounts. That can be done externally using the raw weights, but modern gauges may have internal electronics that will do the processing - for better or for worse. You have a classic "signal to noise" ratio problem with small changes.
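The signal-to-noise problem can be illustrated with a toy Python example. It assumes cumulative readings stored as integers in tenths of a millimetre and a simple noise threshold; the function names, the threshold, and the readings are invented for illustration, and this is not how any particular gauge's firmware processes its data.

```python
def naive_increments(readings):
    """Difference successive cumulative readings with no noise handling."""
    return [later - earlier for earlier, later in zip(readings, readings[1:])]


def thresholded_increments(readings, noise):
    """Report precipitation only once the level has risen more than `noise`
    above the last accepted level; smaller changes are treated as jitter."""
    amounts = []
    baseline = readings[0]
    for level in readings[1:]:
        rise = level - baseline
        if rise > noise:
            amounts.append(rise)
            baseline = level
        else:
            amounts.append(0)
    return amounts


# Hypothetical cumulative readings in tenths of a millimetre.
readings = [1000, 1001, 999, 1002, 1030, 1031, 1080]

naive = naive_increments(readings)
filtered = thresholded_increments(readings, noise=3)
print(naive)
print(filtered)
```

The naive differences include a physically impossible negative amount, while the thresholded version removes it at the cost of delaying or hiding any genuine light precipitation below the threshold. Both versions agree on the cumulative total, which is why such gauges do better over longer periods than over short intervals.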

Weighing gauges should be more reliable for heavier rainfall amounts, but they are still a limiting technology. There are many other brands of weighing gauges, too - Geonor, Pluvio and Pluvio2 are ones that I have worked with. They are generally better at cumulative rainfall estimates over longer periods of time. (One of their advantages is that they collect snow as well as rain.)

Short term rainfall intensity data are more commonly collected using tipping bucket technology, which can provide one-minute rainfall intensity data. Tipping buckets have problems at high rainfall rates, and are not so good for long-term cumulative amounts, so many automated stations (virtually all at Canadian automated stations) will have both types.

Intensity-Duration-Frequency curves are a standard part of precipitation analysis. They are needed for engineering design (drainage design) and are useful for many hydrological and ecological purposes. You can read more about the Canadian methodology and results by following the links on this page.

Any precipitation gauge will have issues with "capture efficiency" at high winds. Winds cause turbulence around the gauge, which generally causes the gauge to under-collect. Much more important for snow, but still a factor with rain. Most automated weighing gauges will be installed with some sort of wind shield to help with this. Tipping buckets are usually mounted close to the surface, where wind is less of a factor. Getting data that have been adjusted for wind capture efficiency is often very difficult.

Changes in instrumentation (which automated gauge, what wind shielding, how the data are processed) will be important in looking at trends.

And none of that helps much with the problems of localized storms passing between recording stations.

Thanks Bob. Your information brought to mind an old link I saved: wmo.asu.edu/content/world-greatest-one-minute-rainfall. The weighing gauge pen jumped 1.34 inches in less than a minute according to that summary. When I thought about the motor pulling paper from the spool I thought what if the motor stops, then restarts? Then the trace would show an artificial jump. Presumably they analyzed the 50 minute interval to determine that the motor didn't have any hiccups, that the paper didn't bind, etc. Also I'm not sure if the motor is turned on and off to move the paper each minute or if it is always on and geared down to move the paper very slowly.

In any case it brings up another point about the short duration rainfalls. Tipping bucket gauges have to be read and ASOS reads every minute. However I believe they only send cumulative amounts at 5 or 15 minute intervals. That may vary and they may or may not retain the one minute readings internally. In any case to beat the world's one minute rainfall record we need one minute resolution.

I have a Rainwise tipping bucket gauge and with an 8 inch diameter I consider it barely adequate for rainfall accuracy (I stand out in the rain to check it against my CoCoRaHS gauge). There are many smaller diameter buckets on the market and I would consider them potentially inaccurate. So while there may be more collection points now they may not be accurate. The second problem is time resolution. I collect measurements once a minute and save them. The measurements fall off the queue after about a day. I could save them permanently but if there's an extreme rainfall I copy the data before I lose the measurements.

The bottom line is that it may be difficult to beat old records made by weighing gauges simply because technology has gotten cheaper and less accurate (IMO).

The lead article is also duplicated on ATTP (andthentheresphysics.wordpress.com) of October 7, 2022.

More than 100 responses at ATTP ~ for those readers with an idle hour, seeking entertainment.

Lots of good comments: from Bob Loblaw, Dikranmarsupial, as well as the deft Willard, and others.

[BL] The direct link to the post at ATTP is this.

Eric:

Ahhh, you're familiar with and participate in CoCoRaHS. That's good. That is an important volunteer network that helps fill in a lot of gaps in the North American precipitation monitoring network.

The 1-minute record precipitation value you link to is interesting. The paper chart system used in that measurement is very similar to what you see in this Wikipedia image of a thermo-hygrograph:

The paper is mounted on a drum that rotates on a clock mechanism. The measurement system controls a pen that moves up and down. Since the pen follows an arc, the lines of equal time on the chart are curved. In the case of the Fischer-Porter precipitation gauge, full travel covers 6 inches of precipitation - but the mechanism is double-jointed: you get 0-6" on an upward arc, then 6-12" on a downward arc. In the chart image on your link, you can see the 1,2,3,4,5 - 7,8,9,10,11 markings on the left-most chart. Quite the mechanical design!

The link that I gave in comment #12 has further details on the recording of precipitation from the US Fischer-Porter network, including a mention of the 15-minute measurements. Although they talk of a "Fischer-Porter" network, most of the automated systems in the US have been using the Geonor T-200 gauges for quite a long time. MSC also makes extensive use of those gauges, but is replacing them with Ott Pluvio2 gauges. Fischer-Porter also morphed into Belfort (which still makes gauges), so you'll see that name commonly, too.

The US and Canada have been moving to more frequent readings than 15-minutes, but as I mentioned the character of the gauges is that the noise makes it very hard to detect small precipitation amounts.

Here are a few references to processing of data from the US network:

As for tipping buckets: at least in Canada they do collect data at one-minute intervals, although that data is not automatically visible to the general public. It is used in the IDF curve analysis I linked to earlier.

Although all of this precipitation gauge discussion may seem to be getting off-topic, I think it gives an interesting perspective in the gory details of weather observations and the things that need to be considered in processing "raw" measurements for trend analysis.

The OP points out that the paper in question has cherry-picked a few analyses that failed to cover a lot of what has been looked at in the literature. Often, proper analysis of weather data needs to understand the intricacies of the measurements - how instruments and processing change over time, the strengths and weaknesses of different measurement technologies, etc. Is the measurement system in question capable of extracting the signal that the analysis is looking for?

If the analysis fails to understand exactly what the measurements represent, and treats a long time series of varying instruments and processing methods as if each reading is 100% reliable, then the analysis will be misleading - possibly misleading the person doing the analysis, let alone the reader.

Caveat emptor.

Hi Bob, Just one note: I have the CoCoRaHS gauge and bought several more for friends. I don't participate yet because I am away too much right now to take daily readings. When that situation changes, I will start doing that.

I will read through those references about the instruments, recording and processing, thanks.

I just took a look at the CoCoRaHS site and its link to where you can buy the precipitation gauge. It is very similar to the "Type B" gauge that used to be the standard across Canada for manual rain gauges. I've emptied a few of those over the years....

You can read more about Canada's manual precipitation standards (including a picture of the Type B) at this link.

Bob,

I like your detailed posts describing how weather data are collected, analyzed and corrected.

I'm not personally that into the collection of weather records as such, but I totally agree Bob Loblaw's posts were detailed, and in my view high quality. I was thinking myself that the exchange between Bob and Eric was a model of how things should be done, with an emphasis on facts, and free of insults and crank science. So unlike a certain other largely unmoderated climate website. Sigh

Noted in a couple of other threads, but worth repeating here.

This paper has been retracted. Further details available in several places:

Why wasn't this paper cycled through PubPeer.com for post peer review? The authors disagreed with the retraction, and that may have given them a chance to air their grievances. Don't have to wait for Festivus Day.

Paul @ 22:

Good question. PubPeer can be a useful method of providing further review of a published article. It requires that someone start the discussion - you, for example, started one on an earlier Pat Frank paper, as you noted at ATTP's blog. Authors of the paper may not participate, though, and sometimes the discussions at PubPeer descend into flame wars that make a Boy Scout wiener roast look innocent (for the wiener).

[Note: I see you posted today at ATTP's that someone has started a PubPeer review.]

I debated starting one over the recent Pat Frank paper discussed here, but your experience with the earlier Pat Frank paper made me feel that it would likely be a waste of time.

There have been other "contrarian" papers that have been handled by either writing to the journal or submitting an official comment to the journal, but not all journals are interested in publishing comments.

Springer has retracted this paper, with only a short note as to why. We do not see the detailed nature of the complaints, what was said in post-publication review, or what the authors said in response. Just the opinion that "...the addendum was not suitable for publication and that the conclusions of the article were not supported by available evidence or data provided by the authors" and the conclusion that "...the Editors-in-Chief no longer have confidence in the results and conclusions reported in this article."

A lot of speculation can be read between the lines of the Springer retraction notice. Sometimes, such reviews can end up with papers being retracted, editors being removed, or even a publisher shutting down a journal (cf. Pattern Recognition in Physics).

Springer has not made the paper "disappear". It is still available on the web page, but marked as retracted. It's just that Springer has put a huge "caveat emptor" on the contents.