Lessons from the Whitehouse-Annan Wager

Posted on 18 January 2012 by dana1981

In 2008, David Whitehouse (former BBC science correspondent with an astrophysics doctorate) made a wager with James Annan (climate scientist and statistics expert) involving global temperature data. Whitehouse wagered that the temperature data from the British Hadley Centre and University of East Anglia (HadCRUT) would not break its record high annual global temperature, which at the time was set in 1998, by 2011. The BBC, which coordinated the bet, recently declared Whitehouse the winner, although as we will see below, the true outcome is not entirely clear.

Predictably, particularly given the extremely poor performance of climate "skeptics" when it comes to climate predictions, the usual climate denial enablers are trumpeting the Whitehouse "victory" far and wide.

However, the stars had to align for Whitehouse to have a chance to win this bet. 2005 and 2010 were hotter than 1998 in the two other major surface temperature data sets, and likely will be in the soon-to-be updated HadCRUT data as well. The current HadCRUT data (HadCRUT3) has a known cool bias because it excludes several large regions which lack temperature station coverage, and also happen to be warming quite rapidly (such as the Arctic). Additionally, short-term natural effects dampened human-caused global warming over much of the 2008-2011 period. Despite this fact, the long-term human-caused global warming trend continues ever upward underneath that short-term natural noise.

More important than the winner of the bet is what we can learn from it. The main lesson here is that short-term temperature changes are quite unpredictable, as natural effects can overwhelm the steady greenhouse gas-caused warming over short timeframes.

It Begins

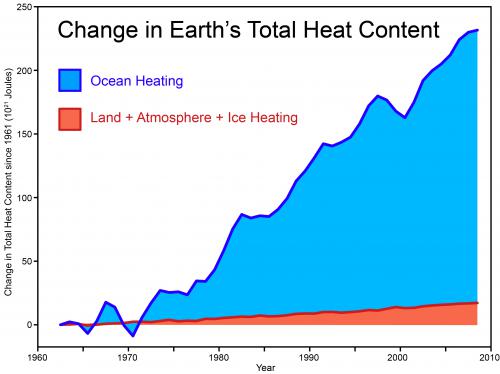

Our story begins in December of 2007, when Whitehouse penned an article for New Statesman in which he repeated the myth of no global warming since 1998 several times. This argument has two glaring fundamental flaws. First, most global warming is going into the oceans, not the air, and the rise in the Earth's total heat content has not abated.

Figure 1: Total global heat content, data from Church et al. (2011)

Second, ten years is too short a timeframe to determine whether the warming has stopped anyway, because short-term noise can easily overwhelm the long-term global warming signal over such short timeframes, as happens on a regular basis (Figure 2).

Figure 2: BEST land-only surface temperature data (green) with linear trends applied to the timeframes 1973 to 1980, 1980 to 1988, 1988 to 1995, 1995 to 2001, 1998 to 2005, 2002 to 2010 (blue), and 1973 to 2010 (red). Hat-tip to Skeptical Science contributor Sphaerica for identifying all of these "cooling trends."
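The effect in Figure 2 can be reproduced with a toy series. The sketch below uses synthetic numbers, not BEST data: it assumes a steady warming trend of 0.02°C per year plus an artificially regular 6-year cycle with a 0.2°C swing, then fits a least-squares line to every 6-year window. The cycle length, swing size, and window length are all illustrative assumptions.

```python
import math

def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    return sxy / sxx

# Synthetic series (NOT real temperature data); every value is an assumption.
TREND = 0.02      # deg C per year, assumed steady warming rate
AMPLITUDE = 0.2   # deg C, assumed size of the short-term swing
PERIOD = 6        # years, an artificially regular cycle
WINDOW = 6        # years covered by each short-term trend line

years = list(range(1973, 2011))
temps = [TREND * (y - 1973)
         + AMPLITUDE * math.sin(2 * math.pi * (y - 1973) / PERIOD)
         for y in years]

# One trend over the whole record, and one per sliding short window.
long_term_slope = ols_slope(years, temps)
short_slopes = [ols_slope(years[i:i + WINDOW], temps[i:i + WINDOW])
                for i in range(len(years) - WINDOW + 1)]
cooling_starts = [years[i] for i, s in enumerate(short_slopes) if s < 0]

print(f"long-term trend: {long_term_slope:+.3f} deg C/yr")
print(f"short windows with a negative trend start in: {cooling_starts}")
```

Even though the underlying trend never changes, several of the short windows show a negative slope, which is the pattern the blue lines in Figure 2 illustrate with real data.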

To his credit, in his 2007 article Whitehouse acknowledged that short-term effects may have been the cause of the temporarily slowed warming of global surface temperatures. Unfortunately he also posited a second, wholly unsupported possible explanation:

"we are led to the conclusion that either the hypothesis of carbon dioxide induced global warming holds but its effects are being modified in what seems to be an improbable though not impossible way, or, and this really is heresy according to some, the working hypothesis does not stand the test of data."

It's not improbable that short-term noise could dampen the long-term global warming signal. In fact, as Figure 2 shows, it happens quite frequently, but the steady rise of human-caused global warming always wins out in the end.

Whitehouse failed to explain exactly how or why the human-caused global warming theory would fail to "stand the test of data," and the proposition is a strange one, since Whitehouse also admits that rising greenhouse gases will undeniably cause the planet to warm.

A number of climate scientists took issue with the "warming has stopped" myth put forth by Whitehouse and other climate "skeptics." The myth was debunked by Gavin Schmidt and Stefan Rahmstorf at RealClimate, and by James Annan on his blog, among others.

The BBC program More or Less set up a wager between Annan and Whitehouse for £100 that, according to HadCRUT, there would be no new record set by 2011. Being a statistics expert, Annan ran the numbers and estimated the odds of a record by 2011 at 87.5%, so he accepted the bet.

Annan's Science vs. Whitehouse's Gut

The basis of Annan's calculations is a simple one - greenhouse gas emissions are rising at a steady rate, and this rise is currently causing approximately 0.02°C warming of the global surface temperatures every year. The challenge is that this human-caused warming can easily be overwhelmed by natural effects over short timeframes. For example, a strong El Niño or La Niña can have a 0.2°C warming or cooling effect, respectively, on global temperatures for a given year.

However, over the long term the temperature effects of the El Niños and La Niñas offset each other, while the steady human-caused warming trend continues to rise. Annan's logic was that between 2008 and 2011 there would be a year in which the short-term natural effects aligned to amplify the human-caused warming trend, leading to a record hot year.
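This reasoning can be illustrated with a rough calculation. The sketch below is not Annan's actual method and is not expected to reproduce his 87.5% figure. It assumes that each year's temperature is the trend line plus independent, normally distributed natural noise, and asks how likely it is that at least one year in a window beats a fixed record. The function name `prob_new_record`, the noise model, and the example values for `gap` and `noise_sd` are all hypothetical.

```python
from statistics import NormalDist

def prob_new_record(gap, trend, noise_sd, years):
    """Chance that at least one of `years` consecutive years beats the record.

    gap      -- record minus the trend-line value in the first year (deg C)
    trend    -- steady warming per year (deg C/yr)
    noise_sd -- standard deviation of yearly natural noise (deg C)

    Years are treated as independent, which real ENSO variability is not.
    """
    noise = NormalDist(mu=0.0, sigma=noise_sd)
    p_no_record = 1.0
    for k in range(years):
        # The trend closes the gap a little more each year.
        remaining_gap = gap - trend * k
        # No record in year k means the noise stayed at or below the gap.
        p_no_record *= noise.cdf(remaining_gap)
    return 1.0 - p_no_record

# Illustrative values only: gap and noise_sd are assumptions, not measurements.
p = prob_new_record(gap=0.05, trend=0.02, noise_sd=0.1, years=4)
print(f"chance of a record within 4 years: {p:.1%}")
```

The point of the sketch is qualitative: a longer window and a steady trend both raise the odds of a record, but as long as the noise is comparable in size to the gap, the outcome of any single short window remains uncertain.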

The basis for Whitehouse's end of the wager was on much shakier scientific footing:

"Looking at HadCrut3 it is clear that there isn’t much of an increase in the 1980s, more of an increase in the 1990s, then there is the big 1998 El Nino, followed by no increase in the past decade or so. It therefore seemed far more likely that the temperature would continue what it had been doing in the recent past than revert to an upward trend, in the next few years at least."

In short, Whitehouse bet that whatever had caused the temporary slowdown in surface warming would continue, despite the fact that he apparently did not comprehend its causes. However, Whitehouse was fortunate in that the short-term cooling effects did indeed continue to impact global temperatures.

Mother Nature Intervenes

The main influences on global temperatures are

- human greenhouse gas emissions

- human aerosol emissions (another byproduct of fossil fuel combustion which blocks sunlight, causing cooling)

- the El Niño Southern Oscillation (ENSO; El Niños and La Niñas)

- solar activity

- volcanic activity

Human nature did not help Annan's case, as human aerosol emissions appear to have increased since 2000, offsetting some of the greenhouse gas-caused warming. But above all, Mother Nature did not work in Annan's favor over the 2008-2011 timeframe.

Solar activity is relatively stable, and thus tends to have a relatively small impact on global temperature changes. However, 2008-2010 was in the midst of the longest solar cycle minimum in a century, which had a cooling effect on global temperatures over that period, working in Whitehouse's favor.

Additionally, 2008 and 2011 were both influenced by strong La Niñas. In fact, in 2011 La Niña had the fifth-strongest cooling effect on any year since 1950, and 2011 was nevertheless the hottest La Niña year on record, according to the World Meteorological Organization. 2009 and 2010 both saw relatively moderate ENSO conditions, and thus were Annan's only real chances of winning the wager. Neither quite broke the 1998 record in HadCRUT3. However, HadCRUT was a rather poor choice of data sets on which to base this wager.

HadCRUT3 Cool Bias

At the end of 2009 (too late to influence the Whitehouse-Annan wager), an analysis by the European Centre for Medium-Range Weather Forecasts (ECMWF) determined that the HadCRUT3 data is biased on the cool side:

"The new analysis estimates the warming to be higher than that shown from HadCRUT's more limited direct observations. This is because HadCRUT is sampling regions that have exhibited less change, on average, than the entire globe over this particular period."

As ECMWF notes, the main problem is that HadCRUT3 lacks temperature station coverage in areas like the Arctic and north and central Africa (Figure 3). The other data sets, which use different methods to extrapolate over the areas that lack coverage, show these to be some of the most rapidly warming areas on Earth.

Figure 3: HadCRUT station coverage and temperature anomalies. Note the lack of coverage at the poles and portions of Africa.

The conclusion that HadCRUT3 data has a cool bias was subsequently supported by the Berkeley Earth Surface Temperature (BEST) project, which conducted an independent analysis of global surface temperature data. The BEST results were in good agreement with estimates by the NASA Goddard Institute for Space Studies (GISS) and National Oceanic and Atmospheric Administration (NOAA), but showed more warming than HadCRUT3 data, particularly since 2000 (Figure 4).

Figure 4: The decadal land-surface average temperature from BEST using a 10-year moving average of surface temperatures over land. Anomalies are relative to the Jan 1950 - December 1979 mean. The grey band indicates 95% statistical and spatial uncertainty interval.

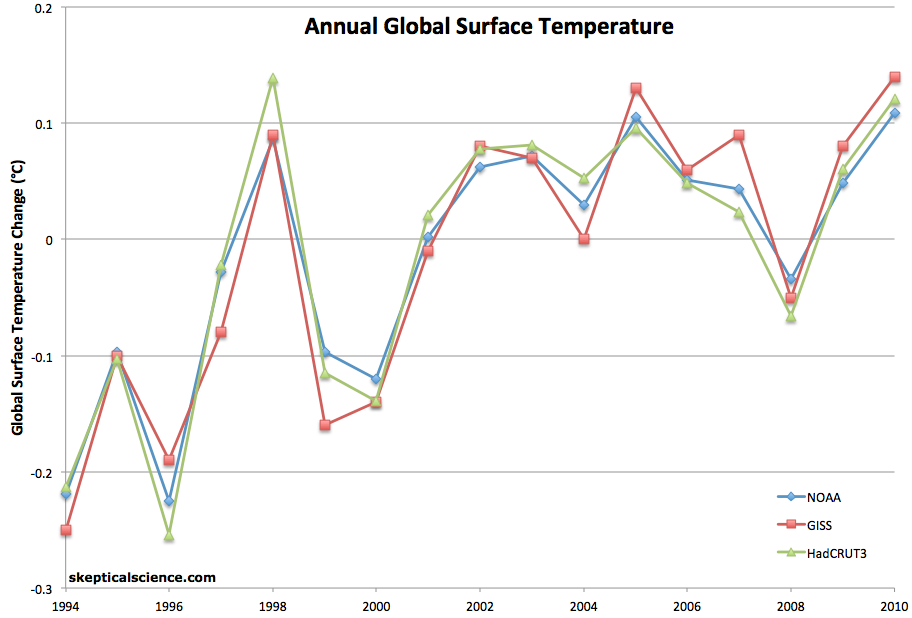

In fact, the wager would not have worked if Whitehouse and Annan had used GISS or NOAA data, because in both of those data sets, 2005 had already exceeded the 1998 temperature record. Additionally, in both data sets, 2010 was statistically tied with 2005 as the hottest year on record (Figure 5). Thus, as Annan noted in a follow-up story on the wager with More or Less, he arguably would have won the wager using either NOAA or GISS data.

Figure 5: NOAA (blue), GISS (red), and HadCRUT3 (green) annual average global surface temperature anomalies, with 1995-2010 baseline. 2010 and 2005 are the two hottest years on record in NOAA and GISS data.

A HadCRUT Update is Forthcoming

Hadley and U. of East Anglia are currently in the process of updating their data set to include additional Russian and Arctic temperature data, amongst other revisions. It appears that, consistent with NOAA and GISS, 2005 and 2010 temperatures will exceed 1998 in the resulting HadCRUT4 data set.

Thus it appears that by 2008, when the wager was made, the 1998 peak had already been exceeded in every major surface temperature data set, and that this will include HadCRUT once the HadCRUT4 results are finalized. It also appears that, as with the NOAA and GISS data, 2010 will be statistically tied with 2005 as the hottest year on record in HadCRUT4.

However, the long-term trend is more important than individual record years, and despite the short-term dampening of global surface warming, the underlying, steady march of greenhouse gas warming continues, as demonstrated by Foster and Rahmstorf.

Foster and Rahmstorf Confirm Annan's Premise

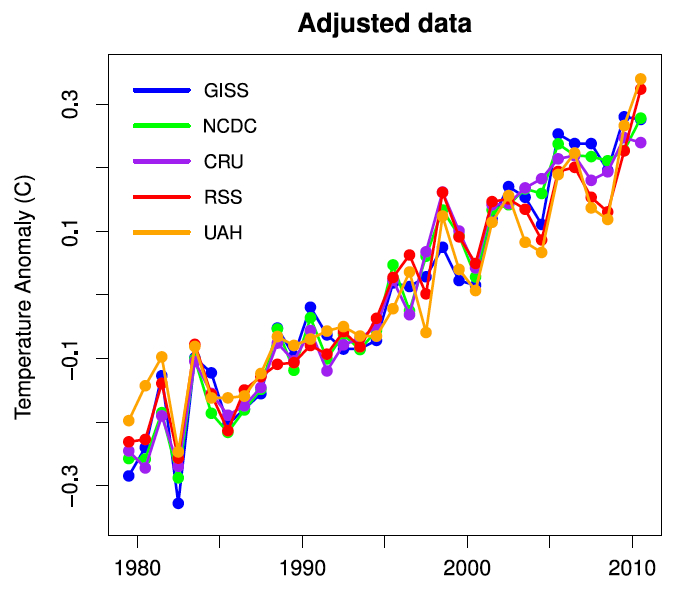

Foster and Rahmstorf (2011) sought to identify the underlying global warming trend by filtering out the effects of solar and volcanic activity and ENSO using a statistical multiple linear regression technique. They found that in every single data set, once these short-term natural effects are removed, 2009 and 2010 were the two hottest years on record (Figure 6), and that the global warming trend has remained remarkably steady underneath that short-term natural noise.

Figure 6: Annual averages of the surface temperature data with the effects of solar and volcanic activity and ENSO removed by Foster and Rahmstorf (2011)
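The idea behind this kind of adjustment can be sketched with a simplified multiple linear regression. The example below is not Foster and Rahmstorf's code or data: the ENSO, solar, and volcanic series are synthetic stand-ins, the coefficients used to build the temperatures are invented, and no time lags are fitted. It regresses temperature on a linear trend plus the three natural factors, then subtracts the fitted natural contributions.

```python
import math

def solve(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x

def fit_least_squares(rows, y):
    """Least-squares coefficients from the normal equations (X^T X) b = X^T y."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    return solve(xtx, xty)

# Synthetic stand-ins (NOT real indices or temperatures); all values assumed.
t = list(range(32))                                   # years since start
enso = [math.sin(2 * math.pi * i / 5) for i in t]     # fake ENSO index
solar = [math.cos(2 * math.pi * i / 11) for i in t]   # fake solar cycle
volcanic = [1.0 if i in (9, 10, 18) else 0.0 for i in t]  # fake eruptions
temps = [0.1 + 0.02 * ti + 0.1 * e + 0.05 * s - 0.3 * v
         for ti, e, s, v in zip(t, enso, solar, volcanic)]

# Design matrix columns: intercept, time, ENSO, solar, volcanic.
rows = [[1.0, ti, e, s, v] for ti, e, s, v in zip(t, enso, solar, volcanic)]
intercept, trend, b_enso, b_solar, b_volc = fit_least_squares(rows, temps)

# Remove the fitted natural contributions, leaving the underlying trend.
adjusted = [y - b_enso * e - b_solar * s - b_volc * v
            for y, e, s, v in zip(temps, enso, solar, volcanic)]

print(f"fitted trend: {trend:.4f} deg C/yr")
```

In this toy case the raw series rises and falls from year to year, while the adjusted series climbs steadily at the trend rate used to build it. Real data also contains noise that no regression removes, so the adjusted curves in Figure 6 are smoother than the raw data but not perfectly straight.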

Global Warming Continues

Most importantly, we shouldn't allow this bet to distract us from the scientific evidence. As shown above, regardless of our wagers and wishes, the planet continues to warm. While statistically speaking a new record will inevitably occur over the next few years, short-term temperature changes are inherently difficult to predict. Nevertheless, underneath all of that short-term natural noise, the steady march of greenhouse gas warming continues ever upward, and will always win out in the end until we do something to change that.


[DB] Climate4you is a well-known disinformationist site, as has been illustrated by various SkS blog posts here and here.

[DB] "when you do a preview, are posted images, or referenced images"

If they are in a standard graphic format such as .jpg, .gif or .png, then yes. Other formats may work, but the Preview function will show what will post. If the Preview shows only an image outline or no image, then no.

Images and graphics contained in .pdf files normally cannot be linked directly (except if they contain embedded hyperlinks). Use a screen capture utility such as MWSnap to extract them, then upload them to a hosting service or to a blog of your own in order to reference them.

Wiki pages often have images on a root page, so ensure the URL string ends in a graphics format (such as .jpg) before linking.