Christy Exaggerates the Model-Data Discrepancy

Posted on 22 June 2012 by dana1981

John Christy, climate scientist at the University of Alabama at Huntsville (UAH), was recently interviewed for an article in al.com (Alabama local news). The premise of the article was reasonable, focusing on the fact that the hot month of May 2012 is by itself not evidence of global warming. However, hot weather will of course become more commonplace as the planet warms and global warming "loads the dice", a fact which Christy and the article neglected to mention.

As has become an unfortunate habit of his, Christy also made a number of misleading claims in the interview. The primary assertion which became the main focus of the article was similar to some other recent claims from climate contrarians - an exaggeration of the discrepancy between global climate models and observational measurements.

Models vs. Data

Specifically regarding the model-data discrepancy, Christy claimed:

"It appears the climate is just not as sensitive to the extra CO2 as the models would predict. We can compare what the models said would happen over the past 34 years with what actually happened, and the models exaggerate it by a factor of two on average."

To arrive at this conclusion, Christy appears to have used the Coupled Model Intercomparison Project Phase 5 (CMIP5) model runs and compared them to the observational data, presumably from UAH estimates of the temperature of the lower troposphere (TLT).

The CMIP5 average model surface temperature simulations, based on the radiative forcings from the IPCC Representative Concentration Pathways (RCPs), are discussed and plotted by Bob Tisdale here. From 1978 through 2011 they simulate a surface warming trend of a bit more than 0.2°C per decade (~0.23°C/decade). The observed surface temperature trend over that period is approximately 0.16°C/decade, or 0.17°C/decade once short-term effects are removed, per Foster and Rahmstorf (2011). According to UAH the TLT trend is approximately 0.14°C/decade.

So while there is a small discrepancy here, it is closer to a factor of 1.3 and no more than 1.6 over the past 34 years, not the factor of 2 asserted by Christy. However, it is possible to find a larger average CMIP5 warming trend (and thus a larger apparent model-data discrepancy), depending on what starting date is (cherry)picked.
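The factors quoted above follow directly from dividing the model trend by each observed trend. As a quick check, here is a minimal sketch using only the trend values given in this post (the variable and function names are just illustrative):

```python
# Trends in °C per decade for 1978-2011, as quoted in the text above.
model_trend = 0.23  # approximate CMIP5 multi-model mean surface trend

observed_trends = {
    "surface": 0.16,            # observed surface temperature trend
    "surface_adjusted": 0.17,   # short-term effects removed (Foster and Rahmstorf 2011)
    "uah_tlt": 0.14,            # UAH lower troposphere trend
}


def exaggeration_factor(model, observed):
    """Ratio of the simulated trend to the observed trend."""
    return model / observed


factors = {
    name: exaggeration_factor(model_trend, trend)
    for name, trend in observed_trends.items()
}

for name, factor in factors.items():
    print(f"{name}: model/observed = {factor:.2f}")
```

The ratios come out between roughly 1.35 and 1.64 depending on which observational record is used, all well short of a factor of two.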

Hiatus Decade Discrepancy

The greater discrepancy over shorter timeframes arises partly from non-climatic interannual variability - particularly the El Niño cycle - which is by its nature unpredictable and has been dominated by a preponderance of La Niña events over the past decade. Over longer timeframes such factors will even out. Other climate-related factors with a cooling influence over the past decade include an extended solar minimum, rising aerosol emissions, and increased heat storage in the deep oceans.

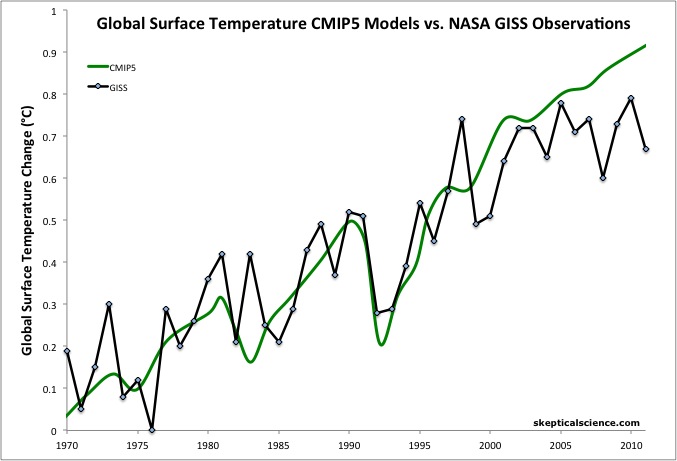

While the RCP scenarios do appear to account for some of these effects by estimating very little increase in total radiative forcing from 2000 through 2011, the average CMIP5-simulated surface temperatures nevertheless continue to rise over this period (Figure 1).

Figure 1: Average global surface temperature anomaly multi-model mean from CMIP5 (green) and as measured by the NASA Goddard Institute for Space Studies (GISS, black). Most of this figure is a hindcast of models fitting past temperature data.

The largest contributing factor to the discrepancy is likely to be the preponderance of La Niña events over the past decade. The CMIP5 models do not simulate the El Niño Southern Oscillation (ENSO) by using observational data - for example, 1998 is not an anomalously hot year in CMIP5 models, which it was in reality due to a very strong El Niño (Figure 1). Thus the CMIP5 models also do not account for the short-term surface cooling effect associated with the recent La Niña events, as more heat has been funneled into the deeper oceans during the current 'hiatus decade'.

There may be other factors contributing to the short-term average model-data discrepancy. For example, it's possible that the transient climate sensitivity of the average CMIP5 model is a bit too high, and/or that the ocean heat mixing efficiency in the models is too high (as suggested by James Hansen), and/or that the recent cooling effects of aerosols are not adequately accounted for, etc. However, thus far the relatively small discrepancy has only persisted for approximately one decade, so it's rather early to jump to conclusions.

More to the point, in his interview John Christy specifically said that models exaggerated the observed warming by a factor of two "over the past 34 years." However, this claim is untrue. The factor of two discrepancy only exists over approximately the past decade, and using the methodology from Foster and Rahmstorf (2011), the preponderance of La Niñas alone over the past decade accounts for roughly half of that discrepancy (removing the effects of ENSO brings the observed GISS trend up from ~0.1°C/decade to ~0.15°C/decade, compared to the ~0.2°C/decade average CMIP5 simulation).
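The "roughly half" figure can be checked with the approximate decadal trends quoted in the paragraph above. This is only a back-of-the-envelope sketch using those rounded values, not a reproduction of the Foster and Rahmstorf (2011) analysis itself:

```python
# Approximate trends over the past decade in °C per decade, as quoted above.
model_trend = 0.20        # average CMIP5 simulation
giss_raw = 0.10           # observed GISS trend
giss_enso_removed = 0.15  # GISS trend with the ENSO influence removed

# Model-data gap before and after removing ENSO.
gap_raw = model_trend - giss_raw
gap_enso_removed = model_trend - giss_enso_removed

# Fraction of the original gap that the ENSO adjustment closes.
share_explained_by_enso = (gap_raw - gap_enso_removed) / gap_raw

# Model/observation ratios before and after the adjustment.
ratio_raw = model_trend / giss_raw
ratio_enso_removed = model_trend / giss_enso_removed

print(f"ratio before ENSO removal: {ratio_raw:.2f}")
print(f"ratio after ENSO removal:  {ratio_enso_removed:.2f}")
print(f"share of gap explained by ENSO: {share_explained_by_enso:.0%}")
```

With these rounded inputs, the factor of two drops to about 1.3 once ENSO is removed, and ENSO accounts for about half of the gap.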

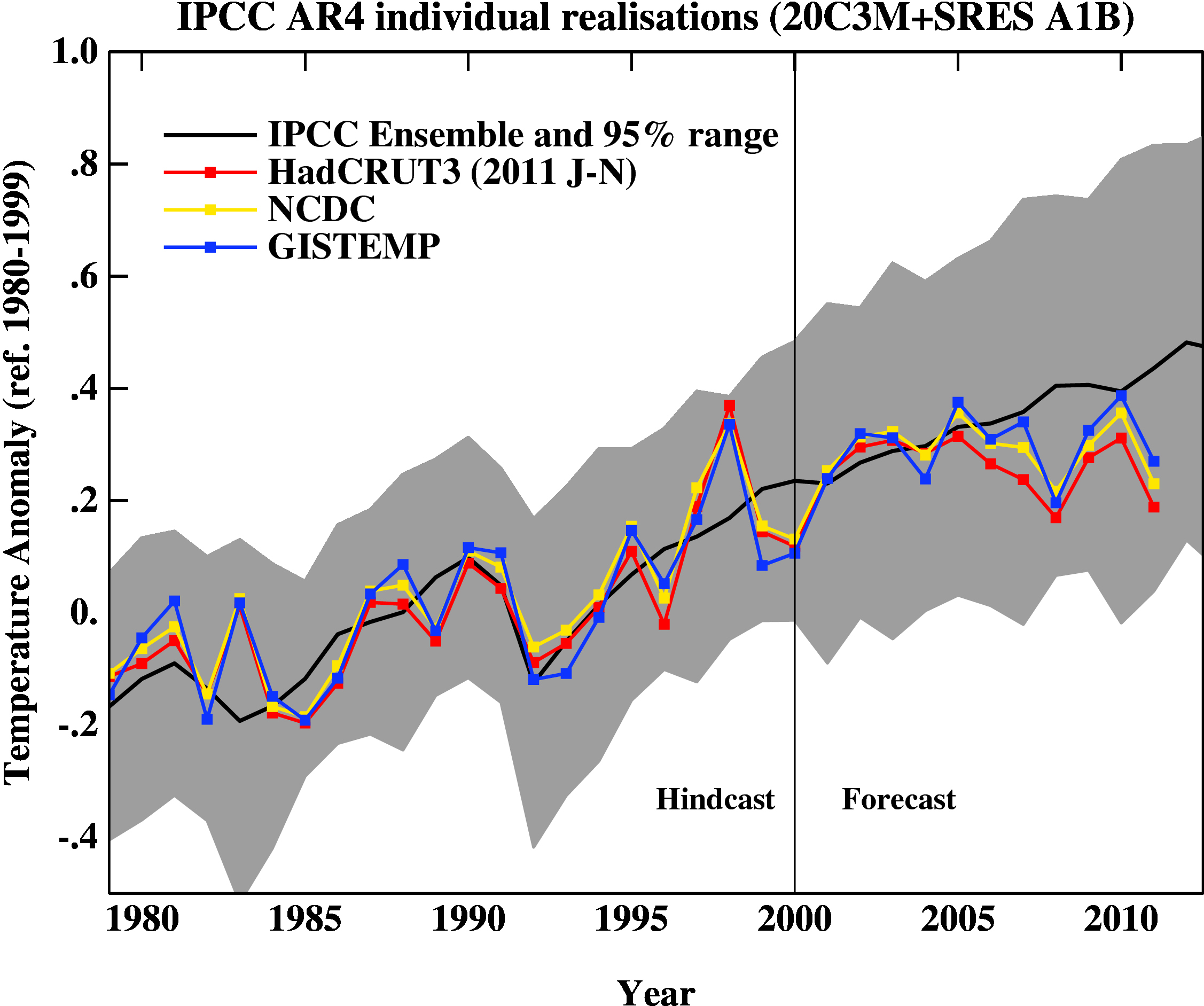

Ignoring the Envelope

It's also important to note that we're only examining the model mean simulation here and not the full range of model simulations. While the observed trend over the past decade is less than the average model simulation, it is likely within the envelope of all model simulations, as was the case in the 2007 IPCC report (Figure 2).

Figure 2: 2007 IPCC report model ensemble mean (black) and 95% individual model run envelope (grey) vs. surface temperature anomalies from GISS (blue), NOAA (yellow), and HadCRUT3 (red).

Essentially Christy is focusing on the black line in Figure 2 while ignoring the grey model envelope.
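To make the distinction between the ensemble mean and the envelope concrete, here is a minimal sketch of how one might test whether an observed trend falls inside a 95% envelope of individual model runs. The per-run trends below are invented placeholder numbers for illustration only, not CMIP or IPCC output, and the helper names are hypothetical. Note also that Figure 2 shows an envelope of temperature anomalies, whereas this simplified sketch works with trends.

```python
def percentile(sorted_vals, q):
    """Linearly interpolated percentile (q in [0, 100]) of a pre-sorted list."""
    pos = (len(sorted_vals) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    frac = pos - lo
    return sorted_vals[lo] * (1 - frac) + sorted_vals[hi] * frac


def envelope(run_trends, coverage=95.0):
    """Lower and upper bounds of the central `coverage` percent of runs."""
    vals = sorted(run_trends)
    tail = (100.0 - coverage) / 2.0
    return percentile(vals, tail), percentile(vals, 100.0 - tail)


def within_envelope(observed, run_trends, coverage=95.0):
    """True if the observed value lies inside the model-run envelope."""
    low, high = envelope(run_trends, coverage)
    return low <= observed <= high


# PLACEHOLDER per-run decadal trends in °C/decade (made up, not real model output).
hypothetical_runs = [0.02, 0.08, 0.12, 0.15, 0.18, 0.20,
                     0.22, 0.25, 0.29, 0.35, 0.41]

ensemble_mean = sum(hypothetical_runs) / len(hypothetical_runs)
observed_trend = 0.10  # approximate observed GISS trend quoted earlier in the post

print(f"ensemble mean: {ensemble_mean:.2f}")
print(f"observed below mean: {observed_trend < ensemble_mean}")
print(f"observed within 95% envelope: {within_envelope(observed_trend, hypothetical_runs)}")
```

The point of the sketch is only that an observation can sit below the ensemble mean while still lying inside the spread of individual runs; whether it does in practice depends on the actual model output.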

Ultimately, while Christy infers that the discrepancy between the data and average model run over the past decade indicates that climate sensitivity is low, in reality it more likely indicates that we are in the midst of a 'hiatus decade' in which heat is funneled into the deeper oceans, where it is poised to come back and haunt us.

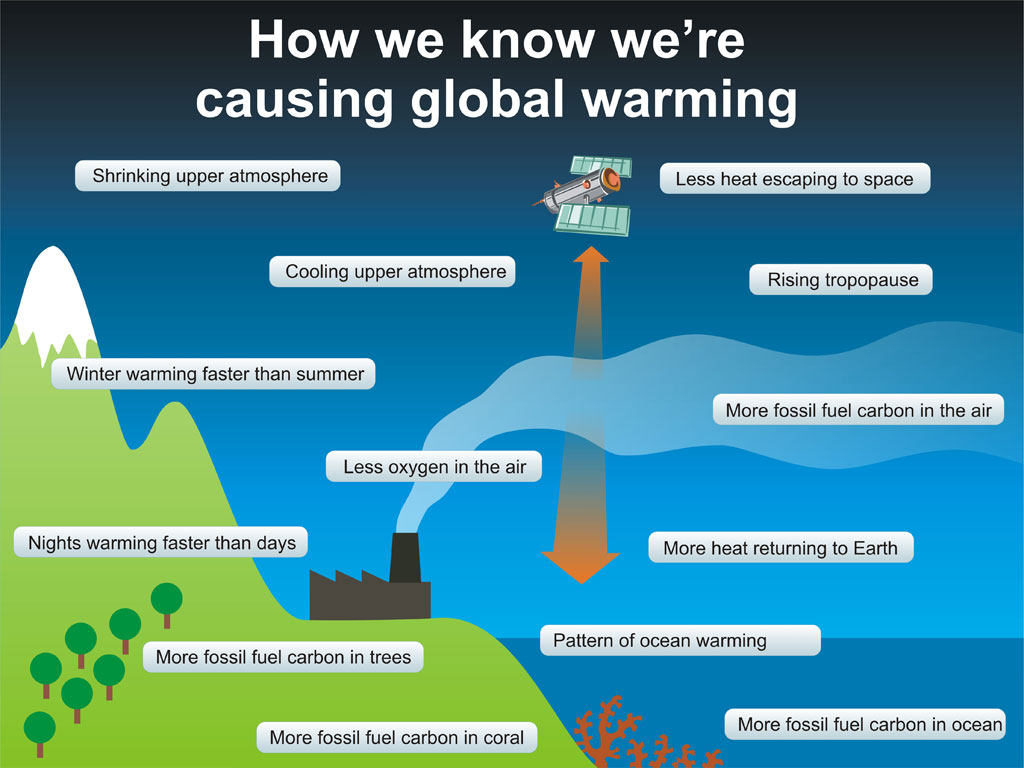

Human Warming Fingerprints

Christy's misleading statements in this interview were not limited to exaggerating the model-data discrepancy. For example,

"...data collected over the past 130 years, as well as satellite data, show a pattern not quite consistent with popular views on global warming."

Christy is incorrect on this issue. There have been dozens of observed anthropogenic 'fingerprints' - climate changes which are wholly consistent with and/or specifically indicative of a human cause (Figure 3).

Figure 3: 'Fingerprints' of human-caused global warming.

Satellites vs. Surface Stations

Although the two show very similar rates of near-surface warming, Christy pooh-poohed the accuracy of the surface temperature record and played up the accuracy of his own UAH TLT record.

"These [satellite] measurements, he says, are much more accurate than relying solely on ground measurements.

"We're measuring the mass, the deep layer of the atmosphere. You can think of this layer as a reservoir of heat. It gives you a better indication than just surface measurements, which can be influenced by so many factors, such as wind and currents, and things like urbanization."

Christy adds that using the same thermometer, on the same spacecraft, adds to measurement accuracy. "It's using the same thermometer to measure the world," he says."

There are a number of problems with this quote. First of all, the accuracy of the surface temperature record has been confirmed time and time again.

Second, there are a number of challenging biases in the satellite record which must be corrected and accounted for. Examples include the orbital drift of satellites and the fact that they have to peer down through all layers of the atmosphere but need to isolate measurements from each individual layer. It's also worth noting here that while Christy has promised to make the UAH source code available to the public, which people have been requesting for over two years, the code has not yet been made public.

Third, contrary to Christy's assertion that TLT measurements are made by a single satellite, they have actually been made by several different satellites over the years, because the measurement instruments don't have lifetimes of 34 years. Splicing together the measurements from various different satellite instruments is another of the challenging biases which must be addressed when dealing with satellite data.

All in all, it is entirely plausible that there are remaining biases in the UAH data which Christy and co. have not addressed, whereas many different studies have confirmed the accuracy of the surface record, and the various different surface temperature records (GISS, NOAA, HadCRUT4, BEST, etc.) are all in strong agreement.

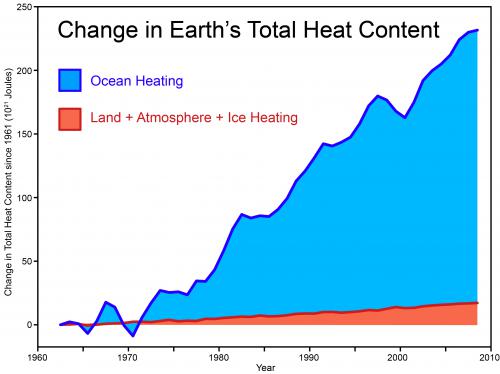

Tip of the Global Warming Iceberg

It's also important to put the warming discussed here in context. We are only focusing on the warming of surface air, whereas about 90% of the total warming of the Earth goes into the oceans. Overall global warming has continued unabated (Figure 4).

Figure 4: Total global heat content, data from Church et al 2011.

Over the past 20 years, 14 x 10^22 Joules of heat have gone into the oceans, which is the equivalent of 3.7 Little Boy Hiroshima atomic bomb detonations in the ocean per second, every second over the past two decades. Over the past decade we're up to 4 detonations per second. Focusing exclusively on surface temperatures, as Christy has in this interview, neglects that immense amount of global warming.
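The detonation-rate figure is a simple unit conversion: total heat divided by elapsed seconds, then divided by the energy of one bomb. Here is a minimal sketch of that arithmetic. The bomb energy is not stated in this post, so the value below is an assumption, as is the use of a 365.25-day year.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # assumes an average year of 365.25 days

# ASSUMPTION: energy of one Little Boy detonation in joules. The post does not
# give the value it used; 6.0e13 J (a bit under 15 kilotons of TNT) is assumed here.
LITTLE_BOY_JOULES = 6.0e13


def detonations_per_second(heat_joules, years, bomb_joules=LITTLE_BOY_JOULES):
    """Average heating rate expressed as bomb-equivalents per second."""
    watts = heat_joules / (years * SECONDS_PER_YEAR)
    return watts / bomb_joules


# 14 x 10^22 joules over 20 years, as quoted above.
rate_20yr = detonations_per_second(14e22, 20)
print(f"{rate_20yr:.1f} detonations per second")
```

With the assumed yield this gives about 3.7 per second, consistent with the figure above. The result scales inversely with the assumed bomb energy; a yield of 6.3e13 J, for example, would give about 3.5.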

Local Aerosol Cooling vs. Global Warming

Despite the premise of the article - that global warming can't be blamed for short-term temperature changes - Christy nevertheless loses focus on long-term global warming and emphasizes local temperature changes.

"Data compiled over the past 130 years show some warming over the northern hemisphere, but actually show a very slight cooling trend for the southeastern U.S., Christy says."

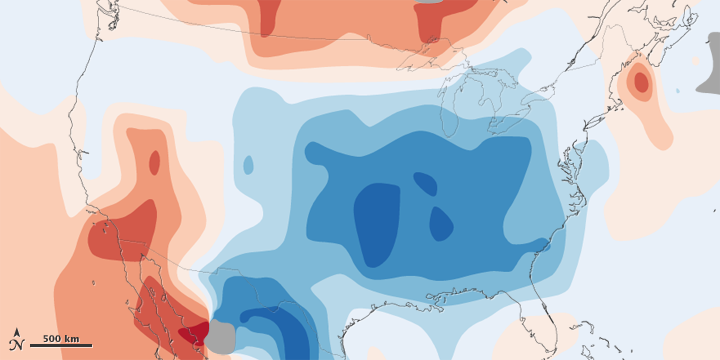

Why this assertion is relevant to the topic at hand is something of a mystery. However, as NASA Earth Observatory shows, the "warming hole" in the southeastern USA is due to local aerosol emissions from coal power plants, which are relatively common in this region of the country (Figures 5 and 6).

Figure 5: 1970-1990 aerosol loading of the atmosphere over the lower 48 United States and estimated associated surface air temperature change.

Figure 6: Observed total surface temperature change over the lower 48 United States from 1930 to 1990.

Christy does not mention this local aerosol loading of the atmosphere, however. Rather than providing this explanation or explaining the important difference between local and global temperatures, Christy leaves the statement about local southeastern USA cooling unqualified and unexplained, which is something of a disservice to his Alabama readers.

Exaggerating Discrepancies to Downplay Concern

To summarize, in this interview Christy has exaggerated the discrepancy between model averages and data, failed to explore the many potential reasons behind that short-term discrepancy, failed to consider the envelope of model runs, neglected the many 'fingerprints' of human-caused global warming, ignored the vast and continued heating of the oceans and planet as a whole, and focused on short-term temperature changes without examining the causes of those changes or putting them in a global context.

The overall tone of the article has likely served to sow the seeds of doubt about human-caused global warming in the minds of Alabama readers. Christy also made various claims about the "resiliency" of the Earth to climate change to further sow these seeds.

Christy further downplayed Alabaman climate change concerns by claiming that global warming will lead to fewer tornadoes, and that the local tornado outbreak of 1932 caused more deaths than recent tornadoes. However, as research meteorologist Harold Brooks discusses, the impact of climate change on tornadoes is not as clear as Christy claims - "there will undoubtedly be more years with many bad storms, as well as years with relatively few." And quite obviously, there were more tornado-related deaths in 1932 because technology and warning times have greatly improved over the past 8 decades, unrelated to climate change.

Unfortunately when we look at the totality of the evidence for human-caused global warming and associated future impacts, the big picture is not nearly so rosy as the one Christy's selective vision paints.

Arguments

- I have deleted the FAR, SAR and TAR graphics from Figure TS.26 in Figure 1 because they make the diagram more difficult to understand and because they are already presented elsewhere in AR4.

- The temperature data shown in AR4 Figure 1.1 does not correspond to that shown in Figure TS.26. The Figure 1.1 data appear to be approximately 0.026 °C higher than the corresponding data in Figure TS.26. I have assumed that this is a typographical error in AR4. Nevertheless, I have applied to the HadCRUT3 data for Figure TS.26 the same 0.026 °C adjustment that was required for AR4 Figure 1.1. My adjusted HadCRUT3 data points are typically higher than those presented in AR4 Figure TS.26.

- Despite items (1) and (2) above, there is very good agreement between the smoothed data in TS.26 and the adjusted HadCRUT3 data presented in Figure 1, particularly for the 1995-2005 period.

- It should be noted that AR4 uses a 13-point filter to smooth the data whereas HadCRUT uses a 21-point filter but these filters are stated by AR4 to give similar results.

Dana, comparing your data and Gavin's projections in the RC chart with the official AR4 projections in Figure 1, the following points are evident:

- There is a huge discrepancy between the projected temperature and real-world temperature.

- Real-world temperature (smoothed HadCRUT3) is tracking below the lower estimates for the Commitment emissions scenario, i.e., the emissions-held-at-year-2000 level in the AR4 chart. There is no commitment scenario in the RC chart to allow this comparison.

- The smoothed curve is significantly below the estimates for the A2, A1B and B1 emissions scenarios. Furthermore, this curve is below the error bars for these scenarios, yet Gavin shows this data to be well within his error bands.

- The emissions scenarios and their corresponding temperature outcomes are clearly shown in the AR4 chart. Scenarios A2, A1B and B1 are included in the AR4 chart – scenario A1B is the business-as-usual scenario. None of these scenarios are shown in the RC chart.

- The RC chart shows real world temperatures compared with predictions from models that are an "ensemble of opportunity". Consequently, Gavin Schmidt states, "Thus while they do span a large range of possible situations, the average of these simulations is not 'truth'" [My emphasis].

In summary, I suggest that your use of the RC chart is a poor comparison. I suggest that Figure TS.26 from AR4 is useful for comparing real world temperature data with the relevant emissions scenarios. By contrast, Gavin uses a chart which compares real world temperature data with average model data which he states does not represent "truth". I suggest that this is not much of a comparison. Therefore, why use the RC data? I conclude that the AR4 chart is much more informative. It is evident that Christy is correct in highlighting a possible discrepancy and there is certainly a discrepancy between the data presented by you and that presented in the official AR4 charts.

- Figure 2 hides the 2-sigma trend in real-world temperatures in a mass of grey, whereas the TS.26 shows this discrepancy very clearly.

- Figure 2 does not show smoothed data. Once again this tends to hide the discrepancy between real-world temperatures and model projections.

- Figure 2 omits the Commitment Scenario that is presented in TS.26. This scenario should be shown in any projections diagram because it is a very useful benchmark for comparing the accuracy of the projections.

If I were to use the AR4 standard terms and definitions to define the 2-sigma confidence levels, Box TS.1 of AR4 would describe the current model results as, "Very low confidence" and the chance of being correct as, "Less than 1 out of 10."

[DB] Cease with your dissembling. Figure 2 (taken from this RC post) is for global model runs. The IPCC figure you cite a portion of is for NH only. You compare apples and porcupines.

Continuance of this posting behaviour of dissembling will result in an immediate cessation of posting rights.

[DB] "Both the RC Figure 2 and my version of AR4 Figure of TS.26 refer to global temperatures."

Still wrong. Your graphic at 28 above is clearly labeled as AR4 Figure 6.10c (IPCC, 2007). Explanatory text of that graphic:

You would be better served by admitting that you misunderstood the applicability of the graphic you referenced and also (un)intentionally misrepresented how you presented it. Since you persist in your error the only conclusion one can draw is that the error is willful; the intent, to dissemble and mislead.

Please note that posting comments here at SkS is a privilege, not a right. This privilege can and will be rescinded if the posting individual continues to treat adherence to the Comments Policy as optional, rather than the mandatory condition of participating in this online forum.

Moderating this site is a tiresome chore, particularly when commentators repeatedly submit offensive, off-topic posts or intentionally misleading comments and graphics or simply make things up. We really appreciate people's cooperation in abiding by the Comments Policy, which is largely responsible for the quality of this site.

Finally, please understand that moderation policies are not open for discussion. If you find yourself incapable of abiding by this common set of rules that everyone else observes, then a change of venues is in the offing.

Please take the time to review the policy and ensure future comments are in full compliance with it. Thanks for your understanding and compliance in this matter, as no further warnings shall be given.